What Do Large Language Models Know? Tacit Knowledge as a Potential Causal-Explanatory Structure

Abstract

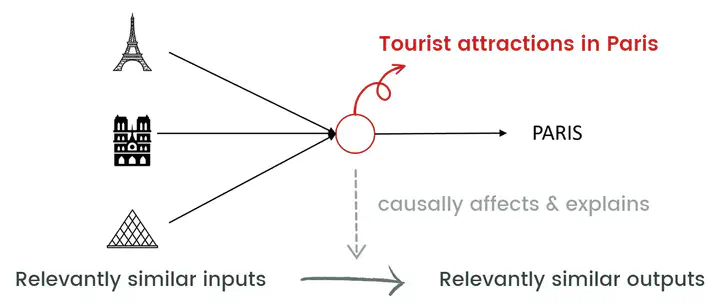

It is sometimes assumed that Large Language Models (LLMs) know language or, for example, that they know that Paris is the capital of France. But what, if anything, do LLMs actually know? In this paper, I argue that LLMs can acquire tacit knowledge as defined by Martin Davies (1990). Whereas Davies himself denies that neural networks can acquire tacit knowledge, I demonstrate that certain architectural features of LLMs satisfy the constraints of semantic description, syntactic structure, and causal systematicity. Thus, tacit knowledge may serve as a conceptual framework for describing, explaining, and intervening on LLMs and their behavior.

Publication

Philosophy of Science